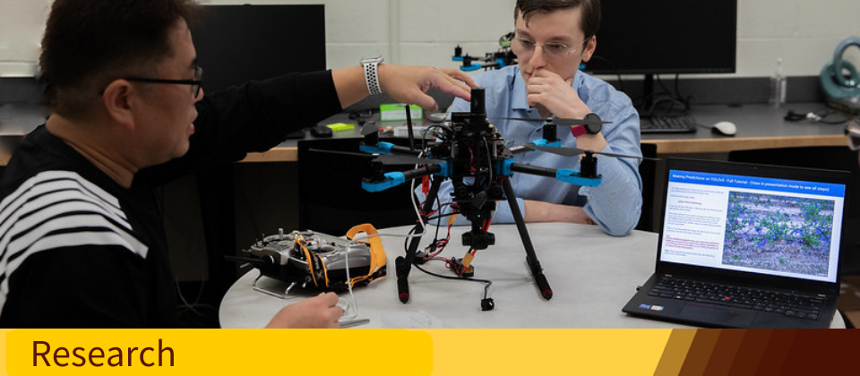

Research

Research

Computer Science Research

Computer Science research is affiliated with the College Office of Undergraduate Research Initiatives (COURI) - an initiative to encourage and facilitate undergraduate students' participation in research. Students will connect with faculty for research and work with them to gain valuable research experience.

See the areas below to learn about research specific to Computer Science.

Student Research

View the research projects, profiles, and accomplishments of our students.

Faculty Research

Learn more about the research activity, publications and grants acquired by our esteemed faculty.

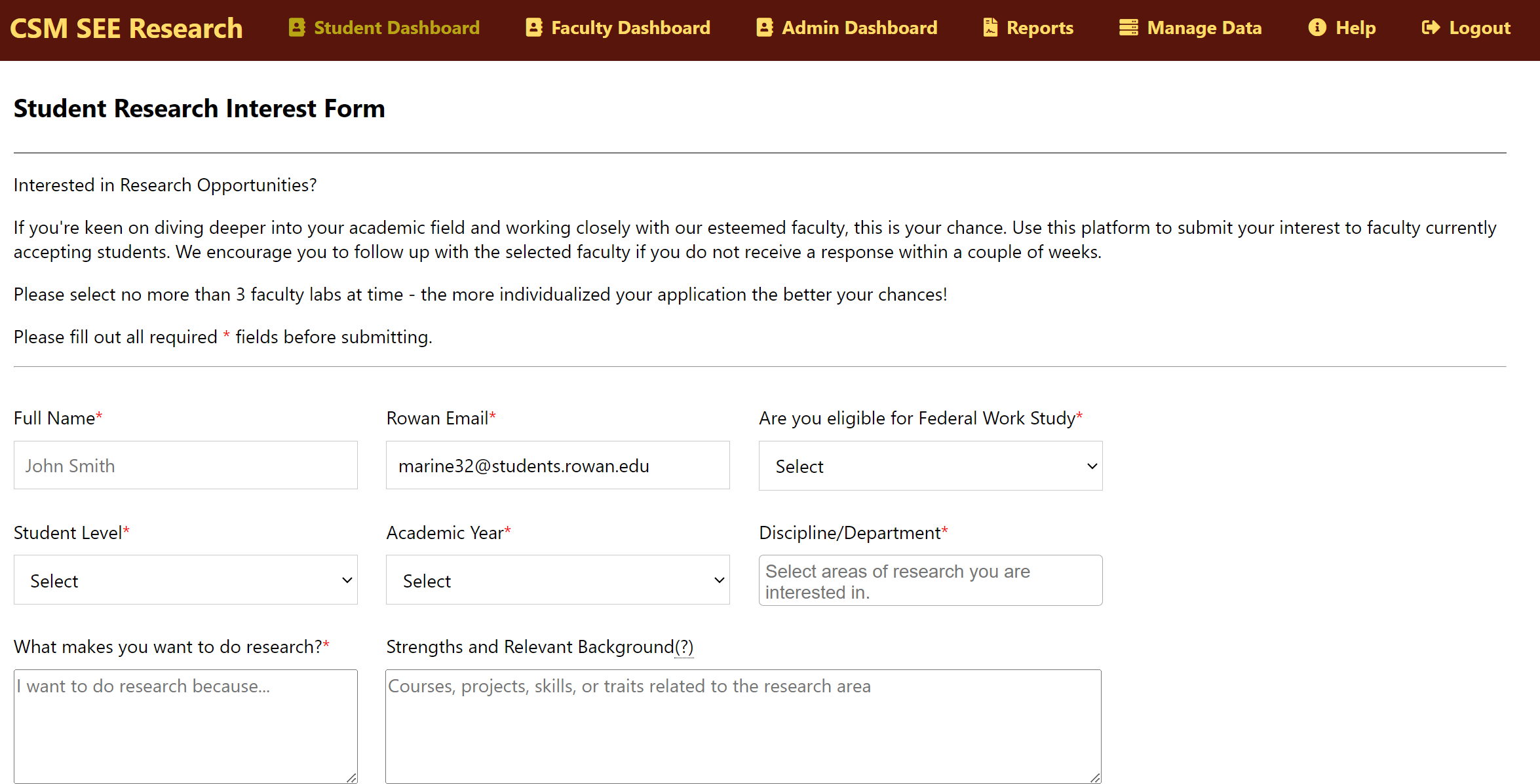

Interested in Research?

Interested in doing research? You can fill out this form to let us know!

Theses & Dissertations

Here is a list of recent Theses and Dissertations made by students in our Masters Degree programs in Computer Science and Data Science.